How I test SQLWATCH on 100 SQL Server instances without breaking the bank.

One of the biggest challenges I face when building SQLWATCH is testing. Up until recently SQLWATCH distributed-only solution. It means that it had to be installed on each monitored server and only worked in a local scope. This made the development and testing easy as I only had to make sure that it runs on the supported versions of SQL Server.

Since introducing the central repository and central collector (coming in version 5) things got quite complicated as now not only do I need to make sure that it works on all supported SQL versions but also that it can handle multiple instances at once. Otherwise, what would be the point of a monitoring solution that cannot monitor multiple servers simultaneously?

This has been a great bottleneck. I aim for SQLWATCH Central Collector to be able to collect near-real-time data from at least 100 SQL instances. A 100 also gives me a good baseline for understanding hardware requirements for the central repository.

The collection tests are usually long-running – I want to test that the data collector can collect data from 100 instances, continuously for hours and days without experiencing memory leaks, orphaned threads etc.

Moreover, the source SQL Servers must run on a recommended minimum hardware. If I am running SQL Server on the cheapest Azure VM (1 core, 1GB of RAM) I get frequent timeouts (especially in SQL 2019) when querying DMVs with no other workload. In other words, the hardware must be good enough to not put any constraints on the SQL Server. I have therefore settled on 2 cores and 4GB of RAM as a minimum config for the monitored test instance. I would be surprised if any of you ran anything smaller in any of your environments.

And if all this was not enough, the SQL Server must be on Windows in order to test WMI interfaces.

So summarising, I need 100 test instances that will run for between 1 day to 15 days a month. Each instance must be at least 2 core and 4GB RAM to not be constrained by the hardware as it must respond to frequent DMVs queries without timeouts. They must be on Windows to provide WMI interfaces. Storage is irrelevant, a standard HDD will be fine.

The cost of running 100 SQL Servers

The test environment is by far the most expensive element of this project. I use a combination of VMs, named instances and containers in my lab.

Virtual Machines with SQL Server

I am going to use Azure in the examples below but similar pricing would apply to any other Cloud provider.

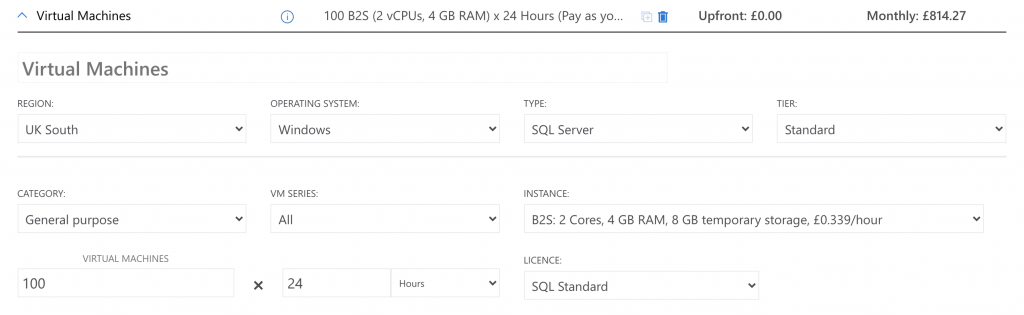

The obvious answer would be 100 Virtual Machines each with an instance of SQL Server. The problem with this approach is the cost. As of October 2021 running 100 B-series SQL Servers for a 24 hour period would cost £814 (US$1092). That is a lot for a free project.

Azure does offer highly discounted Dev/Test subscriptions but only for Visual Studio subscribers (old MSDN) which are quite expensive on their own.

24 hours a month would not be enough to make any substantial development. I really need to run at least 24 hours after every change, so could actually be 7-15 days of runtime each month. This would increase the cost significantly.

To save on storage costs, I could then delete those machines and recreate all 100 of them next time I need to do testing.

I only use this approach to test application functionality on a specific edition or version. For example to make sure I can collect performance from SQL Server 2012 Standard on Windows Server 2012, SQL Server 2014 Express on Windows Server 2016 and SQL Server 2016 Enterprise on Windows Server 2019 and so on… This option gives me the most flexibility.

Virtual Machines without SQL Server

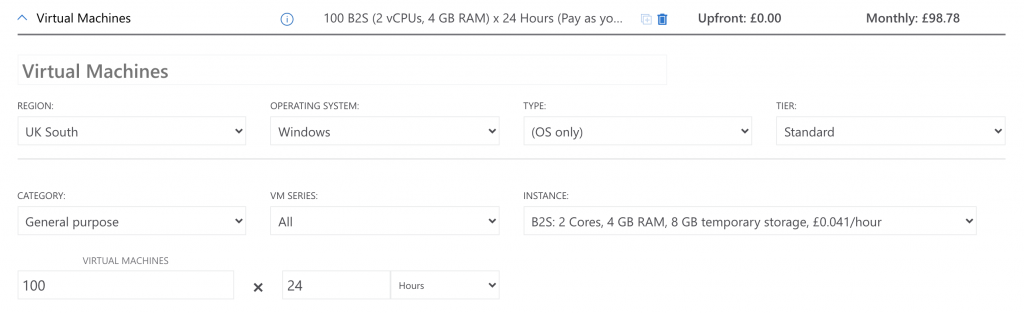

A cheaper option would be to run just Windows VM and install SQL Server Developer edition manually. This would come to £98 (US$132) for 100 Servers running for 24 hours. I would however need to write deployments scripts (either from scratch or use dbatools’ Install-DbaIstance which I blogged about some time ago: How I use dbatools to automate SQL Server installs

to install SQL Server or, do it all manually and keep all 100 servers deallocated when not used. This would however increase storage costs significantly.

There is however a catch. SQL Server Developer is essentially an Enterprise edition that offers a bit more than the Standard edition. As I want SQLWATCH to be 100% compatible with the Standard edition I should really be testing on the Standard edition but there is no “developer” option that would allow me to use it for free.

I tend to use this approach when I can get away with a SQL Server Developer edition that I need to run for a longer period of time – the Central Repository for example. However, I also have a couple of physical servers at home and in my office that I can use if I need to run a VM 24/7 so I rarely use this option in Azure.

Single VM with 100 50* SQL Named Instances

Because SQL Server is just a Windows Service and already designed to be installed as multiple instances on a single OS, this is the best option when it comes to the number of instances per VM ratio but it would require a lot of scripting to stay on top of it. If we have to reinstall the OS or want to re-provision the VM we have to start all from scratch although the Install-DbaIstance can save a lot of time

Before you all get too excited – whilst this would be the most cost-saving testing enviroment as I could pack a lot of instances onto a single VM, it is not something you should go and do in your production environment. I do not intend to run any SQL workload apart from querying DMVs.

This was the ideal setup for me but never got around to getting 100 instances running, The time required to install them even with automation and then stay on top of it (upgrades, starting, stopping etc) was just a bit too much I was willing to spend. If I ever get around to automating this in full I will write a follow-up.

* See Tim’s comment about the max number of instances on a single machine: It’s 50!

Docker Containers

Containers are certainly the future of test and DevOps pipelines for ease of use and low resource requirements. The image containing SQL Server is very small and because Docker takes the virtualisation to the next level and virtualises the OS rather than hardware, the overhead of running containers is very minimal. This has been my preferred way due to the relatively low cost – a single Linux VM (20 cores, 80GB) costs £16.92 (US$22.71) to run for 1 day.

This is the biggest VM in the cheapest B series and 80GB of RAM is the max we can get from the B-series hardware. My requirement was to be able to run each SQL Instance with 2GB of RAM so with 80GB I can only run 40 instances. The workaround would be to simply enable SWAP in Linux and go with SSD disks or run another VM and split the instances 50/50. This would double the cost to around £34 (US$45) for 1 day.

Managing this setup is a breeze. I use bash which I am relatively comfortable with and a Portainer GUI which I also blogged about some time ago: Rapid SQL Server test instances with Docker and Azure.

To create 100 SQL Server containers I wrote a little bash script that takes a couple of minutes to run:

#!/bin/bash

for i in $(seq 1 $2);

do

let "C = $i + $1"

docker run --cpus="0.5" --memory="2g" --memory-swap="4g" -e "ACCEPT_EULA=Y" -e "SA_PASSWORD=SuperDuperPassword1" -p 49$C:1433 --name SqlWatch-$C -h SqlWatch-$C -d mcr.microsoft.com/mssql/server:2019-CU11-ubuntu-20.04;

doneThere is one problem. SQL Server on Docker is Linux based and neither supports WMI. Whilst this is the easiest and the cheapest setup to run, it only allows me to test 80% of my code and the missing 20% is actually one of the most important parts – WMI. Another drawback is that Linux based SQL Servers had a problem with the ring buffer not returning the correct CPU utilisation with the biggest problem being that Linux does not provide WMI which is what SQLWATCH relies on.

Windows-based containers

Ideally, I would like a Docker-like experience but with Windows-based containers but sadly, Microsoft seems to have abandoned their Windows containers and with SQL Server images being around 18GB in size this is less than ideal from a storage perspective. However, whilst researching my options I came across Windocks which seem to be doing what I need. Although they offer a community edition for free, its limitations do not satisfy my requirements so I have asked if they want to support SQLWATCH by helping me with my test environment.

Conclusion

Hopefully, this gives you some overview of the effort that goes into SQLWATCH development and testing. If you would like to stay up to date, please subscribe to this blog. If you would like to support the project financially you can do so by sponsoring me on GitHub.